Multi-Mission CUxS Solutions

Precision-driven counter-drone solutions powered by unmatched AI and data superiority.

Dismounted

Built for the most demanding environments, DroneShield solutions deliver operational precision, system resilience, and layered defense at scale.

On-The-Move

Designed for rapid deployment and ease of use, on-the-move solutions ensure swift threat detection and defense wherever the mission demands.

Fixed Site

Modular and scalable defense solution engineered for comprehensive threat management.

Software

Counter-UAS software powered by superior AI and data precision. Operators can detect, track, and defeat threats through a simple-to-use interface that provides true situational awareness for a decisive edge.

Layered Defense

Modular and scalable layered defense solutions, built for seamless interoperability and mission-specific configurations.

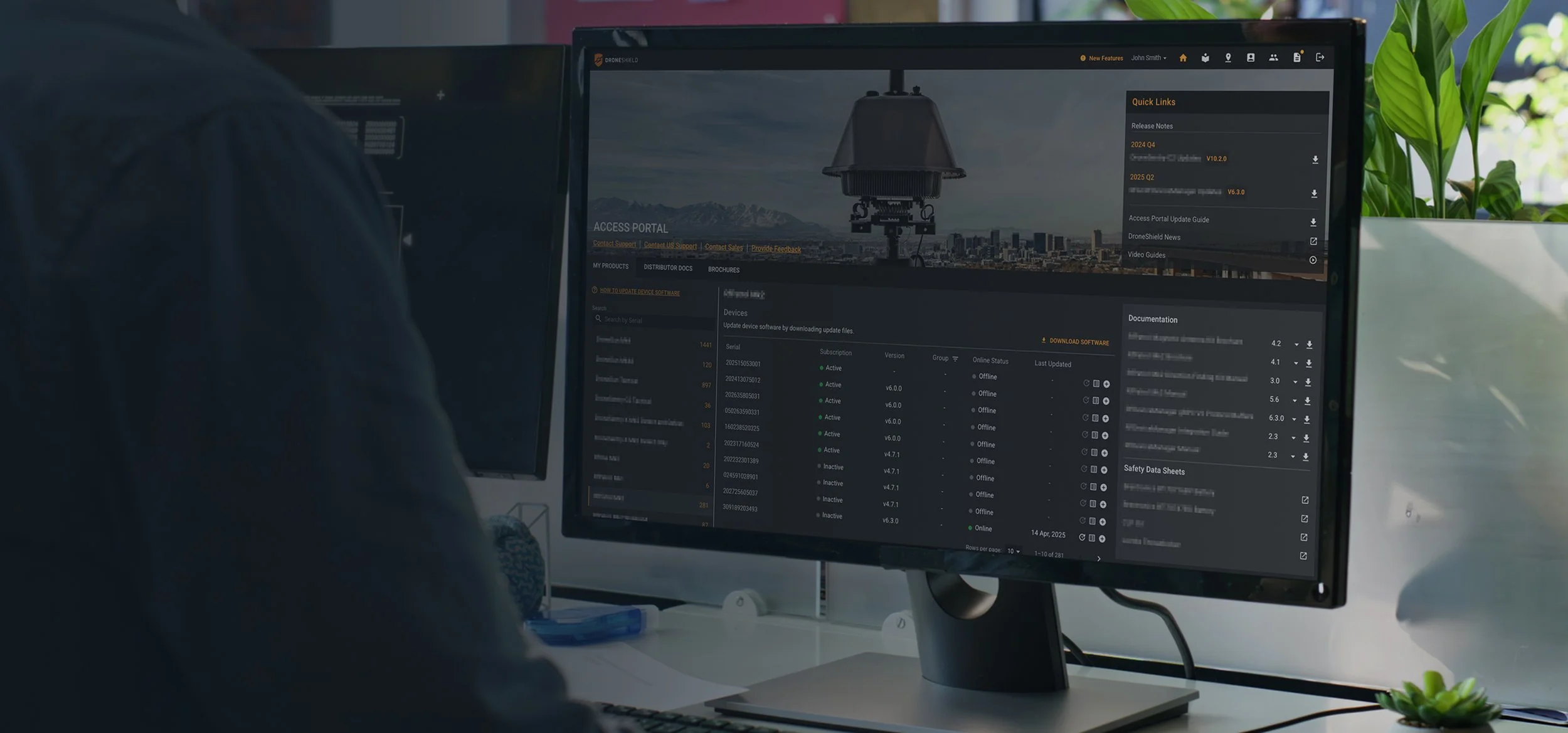

Access Portal

All critical resources, support, account tools, and mission planning features are accessible in one secure portal for DroneShield customers.

Product Support

Full customer tech support, access to the training portal, and the option to purchase training packages.

Training

Tailored Training Solutions

Expert training packages are customized to meet the unique needs of each team, ensuring maximum impact and efficiency.

Comprehensive Curriculum

We offer a wide range of in-depth training modules, covering everything from basic to advanced topics, ensuring DroneShield users gain the skills they need to succeed.

Hands-On Learning Experience

Our interactive, practical training approach helps participants apply new skills immediately, reinforcing learning through real-world scenarios.

Flexible Delivery Options

Options include on-site instruction or live virtual sessions, tailored to fit varying schedules and learning preferences.

Ongoing Support and Resources

Continued access to training materials, tutorials, and follow-up support through our customer portal ensures our users remain proficient in the use of our systems.

Technical Support

On-Demand Help

A team of expert tech support specialists is readily available to provide fast, reliable assistance for any technical challenge.

Highly Trained Support Staff

Our support team consists of certified specialists with extensive knowledge of our products, ensuring quick and accurate resolutions.

Comprehensive Troubleshooting

Whether it’s a simple question or a complex issue, we provide detailed, step-by-step guidance to resolve any problem.

Remote Assistance & On-site Support

Both remote troubleshooting and on-site support options are available to provide maximum flexibility in addressing technical needs.

Customer-Centric Solutions

Expert tech support focuses on delivering tailored solutions that align with specific requirements, ensuring satisfaction and continued success with the products.


Stay Ahead of Evolving Threats: Access our CUAS Factbook

Essential insights on counter-drone technology and emerging threats